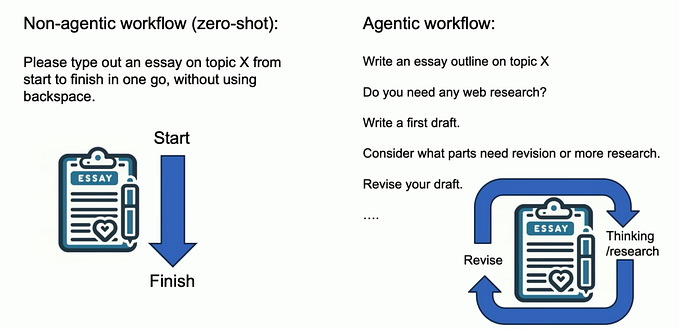

The function calling API from OpenAI demonstrates the potential of using LLMs to solve dialog understanding in a zero-shot setting, with nothing more than function specifications and their parameters.

OpenAI’s function calling API cannot solve dialog understanding

Despite the promised developer experience, the current API definition falls short of production requirements. The problem is not accuracy. Accuracy is not there yet, but it is reasonable to expect OpenAI to improve its models over time. Regardless of accuracy, there are some fundamental issues with using OpenAI’s function calling API for dialog understanding:

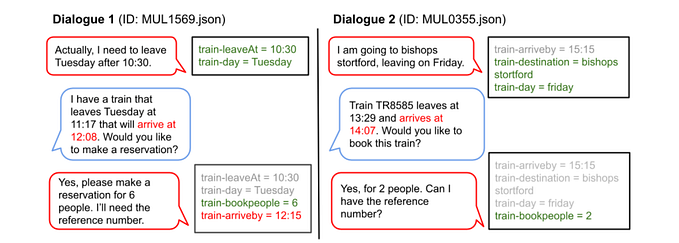

- Users often do not supply all the information needed to call a function. When that happens, they typically provide the remaining preferences in response to a chatbot prompt, supplying only the value for the requested parameter slot without restating the function they want to call. We need an API that can understand such follow-up utterances.

- There is no easy way to fix understanding errors. No matter how much OpenAI improves its function calling API, the model will still make mistakes. When those mistakes are high-risk or high-impact for a business, the business needs a simple way to fix them.

- LLMs generally know only what is encoded in their public-domain training text. Even when a business has private information that could help the text-to-JSON conversion, such as the list of valid values for a parameter, there is no good way to pass it to the function calling API in its current form.

- There is no built-in support for conversation functions, which control the conversation state: closing a session, changing slot values, handing the conversation off to a live agent, and so on. These conversation functions are critical for natural and effective conversations in every CUI application. Importantly, they are not business-related, so they should be solved once, for all developers, without requiring developer involvement.

Let’s design a usable API that fixes these known issues.

It turns out it is not hard to extend the function calling API to the point where it can serve as a dialog understanding module. We still want to provide this text-to-JSON capability from just a function specification, but slot/parameter labels should then follow a tokenization-friendly naming convention, such as snake case. It is better, however, to also add a name field holding human-readable forms as a list of strings, as follows:

```jsonc
{ // the example function specification, separating label from name.
  "label": "get_current_weather",
  "name": ["get current weather"],
  "description": "Get the current weather in a given location",
  "parameters": {
    "type": "object", // this can be a custom type.
    "properties": {
      "departing_city": {
        "type": "string",
        "name": ["the departing city"],
        "description": "The city and state, e.g. San Francisco, CA"
      },
      "unit": {
        "type": "string",
        "name": ["unit"],
        "enum": ["celsius", "fahrenheit"]
      }
    },
    "required": ["departing_city"]
  }
}
```

To make understanding errors easy to fix, developers can attach exemplars to functions in the following format:

```jsonc
{ // an example of exemplars attached to a function.
  "funcId": "service/api/{get/post}", // something that identifies the function.
  "exemplars": [{
    "type": "implies",
    // slot_label can be departing_city, as above.
    "utterance": "Like to start from <slot_label> instead"
  }]
}
```

To make the API useful for understanding user utterances in subsequent turns of a conversation around a function, we add a dialog expectation parameter to the convert function: a list of active slots and their corresponding functions. We also let developers supply hints about the potential entities that occur in the input utterance.

```python
from opencui import Converter

# Create the converter object from a Hugging Face model path.
converter = Converter(huggingface_model_path)

# One can add more than one set of function specs.
converter.add_function_specs(specs)

# One can add more than one set of exemplars.
converter.add_exemplars(exemplars)

# Persist the converter to disk.
converter.finalize(path_to_persist)

# Load the dialog understanding module from disk.
du = converter.load_from_disk(path_to_persist)

callables = du.convert(
    # What the user said: str.
    utterance=utterance,
    # For each slot type, the list of spans for its candidate values:
    # dict[str, list[tuple[int, int]]].
    entities=entities,
    # The currently active function topics, as a list of (slot, function):
    # list[tuple[str, str]].
    expectations=expectations,
)
```

Here, ‘entities’ lets developers mark the candidate values for the slots involved, and ‘expectations’ captures the active frames (function calls) as conversational context. The return value can use the same format as OpenAI’s function calling, so the API can serve as a drop-in replacement.
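To make the roles of ‘entities’ and ‘expectations’ more concrete, here is a toy, rule-based stand-in for `convert`. It is not the OpenCUI implementation and uses no model; `ToyConverter`, its exact-match rules, and the use of `<departing_city>` as a concrete placeholder (following the exemplar comment that `slot_label` can be `departing_city`) are illustrative assumptions. It shows how a slot-only follow-up, either a bare value or a sentence matching an exemplar template, is resolved against the active expectation, and it returns results in the OpenAI `function_call` shape with `arguments` as a JSON string.

```python
import json
import re


class ToyConverter:
    """Hypothetical rule-based stand-in for the proposed converter; no LLM involved."""

    def __init__(self):
        # Function id -> list of exemplar utterance templates.
        self.exemplars = {}

    def add_exemplars(self, spec):
        # spec follows the exemplar format: {"funcId": ..., "exemplars": [...]}.
        # Only the utterance template is used; the exemplar "type" is ignored here.
        templates = [e["utterance"] for e in spec["exemplars"]]
        self.exemplars.setdefault(spec["funcId"], []).extend(templates)

    @staticmethod
    def _matches(template, utterance, slot, value):
        # Put the candidate value in place of the <slot> placeholder, then require
        # the whole utterance to match the template (case-insensitive).
        pattern = re.escape(template).replace(re.escape(f"<{slot}>"), re.escape(value))
        return re.fullmatch(pattern, utterance.strip(), flags=re.IGNORECASE) is not None

    def convert(self, utterance, entities, expectations):
        # entities: slot label -> list of (start, end) character spans in utterance.
        # expectations: active (slot, function) pairs from the conversation context.
        for slot, function in expectations:
            for start, end in entities.get(slot, []):
                value = utterance[start:end]
                bare_answer = utterance.strip() == value
                templated = any(self._matches(t, utterance, slot, value)
                                for t in self.exemplars.get(function, []))
                if bare_answer or templated:
                    # Same shape as an OpenAI function_call: arguments is a JSON string.
                    return [{"name": function, "arguments": json.dumps({slot: value})}]
        return [{"name": "do_not_understand", "arguments": "{}"}]


converter = ToyConverter()
converter.add_exemplars({
    "funcId": "get_current_weather",
    "exemplars": [{"type": "implies",
                   "utterance": "Like to start from <departing_city> instead"}],
})
utterance = "Like to start from Boston instead"
start = utterance.index("Boston")
print(converter.convert(utterance,
                        {"departing_city": [(start, start + len("Boston"))]},
                        [("departing_city", "get_current_weather")]))
```

A real implementation would rely on a model rather than exact template matching; the point of the sketch is only that expectations let a reply that names no function still map to one.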

Implications for supporting conversation functions

Precisely recognizing conversation functions, such as ‘abort_intent’ or ‘change_slot_value’, is crucial for dialog management. To support these functions properly, function specifications need to support generic types. For example:

```jsonc
{ // an example generic function specification; "slot_label" acts as a type parameter.
  "label": "change_slot_value",
  "name": ["change_slot_value"],
  "description": "change old <real_slot_label> to new <real_slot_label>",
  "parameters": {
    "type": "object", // this can be a custom type.
    "properties": {
      "old_value": {
        "type": "slot_label",
        "description": "The old value for the <slot_label>"
      },
      "new_value": {
        "type": "slot_label",
        "description": "The new value for the <slot_label>"
      }
    }
  }
}
```

Because LLMs work best when everything is spelled out explicitly, the API implementation needs to instantiate these conversation functions for every slot the chatbot requires. This way, the chatbot can generate the appropriate calls for these special functions.
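A minimal sketch of that instantiation step, assuming the generic specification is held as a Python dict in the format above. The helper names `instantiate_for_slots` and `_substitute`, the `<function>_<slot>` labeling scheme, and the choice to replace the generic `slot_label` type with each slot's declared type are all hypothetical, chosen for illustration.

```python
# Generic conversation function, mirroring the specification above.
GENERIC_CHANGE_SLOT_VALUE = {
    "label": "change_slot_value",
    "name": ["change_slot_value"],
    "description": "change old <real_slot_label> to new <real_slot_label>",
    "parameters": {
        "type": "object",
        "properties": {
            "old_value": {"type": "slot_label",
                          "description": "The old value for the <slot_label>"},
            "new_value": {"type": "slot_label",
                          "description": "The new value for the <slot_label>"},
        },
    },
}

PLACEHOLDERS = ("<real_slot_label>", "<slot_label>")


def _substitute(node, slot, slot_type):
    """Recursively build a copy of node with generic placeholders filled in."""
    if isinstance(node, dict):
        return {key: _substitute(value, slot, slot_type) for key, value in node.items()}
    if isinstance(node, list):
        return [_substitute(item, slot, slot_type) for item in node]
    if isinstance(node, str):
        if node == "slot_label":  # the generic parameter type
            return slot_type
        for placeholder in PLACEHOLDERS:
            node = node.replace(placeholder, slot)
        return node
    return node


def instantiate_for_slots(generic, slot_types):
    """Return one concrete spec per slot, e.g. change_slot_value_departing_city."""
    specs = []
    for slot, slot_type in slot_types.items():
        spec = _substitute(generic, slot, slot_type)  # generic itself is not mutated
        spec["label"] = f"{generic['label']}_{slot}"
        specs.append(spec)
    return specs


specs = instantiate_for_slots(GENERIC_CHANGE_SLOT_VALUE,
                              {"departing_city": "string", "unit": "string"})
for spec in specs:
    print(spec["label"], "->", spec["description"])
```

With the two slots from the weather example, this yields `change_slot_value_departing_city` and `change_slot_value_unit`, each with its own spelled-out description and parameter types.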

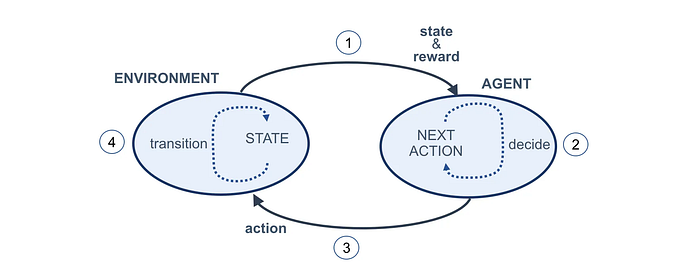

Furthermore, to work in a dual-process setup, dialog understanding (DU) must generate a ‘do_not_understand’ output for every user utterance it does not understand.
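One way to act on that output in a dual-process setup is to let the DU module's structured result drive the dialog manager and hand anything marked `do_not_understand` to a second, more open-ended system. The sketch below assumes the OpenAI-style `{"name", "arguments"}` result shape; `route`, `dialog_manager`, and `fallback` are hypothetical callables, not part of any published API.

```python
import json
from typing import Callable, Dict, List


def route(utterance: str,
          callables: List[Dict[str, str]],
          dialog_manager: Callable[[str, dict], str],
          fallback: Callable[[str], str]) -> str:
    """Hypothetical dual-process router driven by the DU output."""
    understood = [c for c in callables if c["name"] != "do_not_understand"]
    if not understood:
        # DU says it does not understand: hand the turn to the fallback system.
        return fallback(utterance)
    # DU understood: execute its structured calls through the dialog manager.
    return " ".join(dialog_manager(c["name"], json.loads(c["arguments"]))
                    for c in understood)


def dialog_manager(name: str, args: dict) -> str:
    return f"calling {name} with {args}"


def fallback(utterance: str) -> str:
    return f"fallback handles: {utterance}"


print(route("tell me a joke",
            [{"name": "do_not_understand", "arguments": "{}"}],
            dialog_manager, fallback))
print(route("Boston",
            [{"name": "get_current_weather",
              "arguments": '{"departing_city": "Boston"}'}],
            dialog_manager, fallback))
```

An explicit ‘do_not_understand’ keeps the two paths cleanly separated: the deterministic dialog manager only ever sees calls DU is willing to stand behind.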

Parting words

The original OpenAI API has other practical issues as well: it requires developers to send the function specifications as context with every call. With many functions, this increases cost and can exceed the maximum context length permitted by the model. The API proposed here does not require developers to include function specifications in each call, which sidesteps this issue.

The function calling API from OpenAI works well for single-turn, command-style interactions. However, its design lacks some fundamental considerations for full-fledged multi-turn conversations. This post proposes the tweaks needed to fix these issues; once implemented, the resulting API can be used directly as a dialog understanding module. If you want to try our implementation of this API design, feel free to drop a note.
