In Sequoia Capital’s AI Ascent 2024 opening remarks, an interesting statement is made: “Because of the ability to interact with users in a human-like manner, one of the significant opportunities for AI is to replace services with software.” However, more than 18 months after instruction-tuned language models like ChatGPT made it possible to access common knowledge conversationally, there are still not many usable conversational services. What is preventing businesses from transitioning to this magical user experience? To answer this question, let’s start with why building a conversational user interface (CUI) is hard.

A CUI is harder to build than a GUI

Interacting with graphical user interfaces (GUIs) is a skill that humans acquire through practice, meaning that what constitutes a good or bad experience is largely shaped by existing implementations. Grounding interaction in semantics is straightforward: an input box labeled “destination” is there to collect the choice of destination. Furthermore, users can only interact with applications in ways that were developed for them. As long as every interaction leads to a meaningful state, a GUI experience is considered acceptable even when it covers only the typical happy path. Thus it is not too much work for GUI developers to meet the expectations of their target users. With CUIs, things have changed.

Dialog understanding is tricky

Even people who speak the same language have learned it slightly differently due to their upbringing. As a result, the natural language we speak is messy. We can express the same meaning in different ways:

1. I will take the white one.

2. I like the white one.

3. The white one looks better.

We learned how to pick the right interpretation of phrases that can mean different things in different contexts:

1. The Apple stock just went through the roof.

2. We have enough apples in stock.

We can easily infer the implied meaning from the context:

Waiter: Our level 5 is too spicy for most people, are you sure you want it?

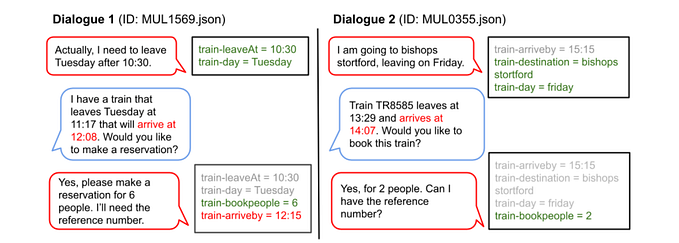

User: I lived in Sichuan for 5 years. (Implied: yes.)

Dialog understanding, the process of grounding natural text onto canonical semantics with respect to context, can thus be a tricky problem. Historically it has been addressed as supervised machine learning problems, commonly known as intent classification and slot filling. Solving these problems requires a sizable set of labeled examples for every new skill we want to expose to users conversationally, which can take a long time and be very costly. With LLMs, which are pretrained on a huge amount of text, the number of labeled examples needed to reach the same level of performance has been greatly reduced, and nowadays it is much easier to support new skills given the zero/few-shot capability of instruction-tuned LLMs.
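To make the target of dialog understanding concrete, here is a minimal sketch of the canonical form that intent classification and slot filling aim to produce. The `Frame` type, the intent and slot names, and the `EXAMPLES` table are all hypothetical; the table merely stands in for a trained classifier or an instruction-tuned LLM. It only illustrates that different wordings ground to the same semantics, and that context changes how a reply is read.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Frame:
    """Canonical semantics of one utterance: an intent plus slot values."""
    intent: str
    slots: tuple = ()  # (slot_name, value) pairs


# Hypothetical labeled examples, keyed by (context, utterance).
# A real system would replace this table with a trained model or an LLM call.
EXAMPLES = {
    (None, "I will take the white one."): Frame("select_item", (("color", "white"),)),
    (None, "I like the white one."): Frame("select_item", (("color", "white"),)),
    (None, "The white one looks better."): Frame("select_item", (("color", "white"),)),
    ("confirm_spice_level", "I lived in Sichuan for 5 years."): Frame(
        "confirm", (("answer", "yes"),)
    ),
}


def understand(utterance: str, context: Optional[str] = None) -> Optional[Frame]:
    """Ground an utterance onto a canonical frame, given the dialog context."""
    return EXAMPLES.get((context, utterance))
```

In this sketch the three "white one" utterances all return the same `Frame`, while the Sichuan reply only resolves to a confirmation when the context says a yes/no question is pending.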

Users will take liberties

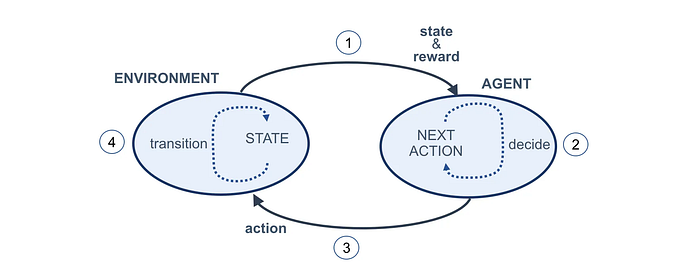

When we, as users, can say anything we see fit at any given turn, we will. In a GUI setting we don’t modify the destination on a date picker page because we can’t; in a CUI setting we can, and will, ask to change the destination when we are asked for the departure date. After all, we grow up expecting the other party to respond properly to our detour questions. While we can adjust our expectations when interacting with software, how effectively another person could help us achieve our goals will always serve as the reference point. A CUI that deviates too much from that experience will lose us.
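One way to picture this is a slot-filling state that accepts a value for any slot at any turn, not only the one the bot just asked about. The slot names and the already-parsed user input below are illustrative assumptions; how the utterance gets parsed is out of scope for this sketch.

```python
# Slots the hypothetical booking service needs, in the order the bot asks for them.
SLOTS = ("destination", "departure_date")


def apply_turn(state: dict, mentioned: dict) -> dict:
    """Merge every known slot the user mentioned, whether it was asked for or not."""
    updated = dict(state)
    updated.update({k: v for k, v in mentioned.items() if k in SLOTS})
    return updated


def next_slot_to_ask(state: dict):
    """Return the first slot still missing, or None when all are filled."""
    for slot in SLOTS:
        if slot not in state:
            return slot
    return None


# The bot already has the destination and has just asked for the departure date,
# but the user changes the destination instead of answering.
state = {"destination": "Boston"}
state = apply_turn(state, {"destination": "Chicago"})
```

After this turn the destination is updated and `next_slot_to_ask(state)` still points at `departure_date`, so the bot honors the detour and then returns to its question.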

While it is acceptable for a chatbot or agent to say “sorry, I don’t understand” to irrelevant user queries, such a response to relevant user input can quickly erode the user’s confidence in the chatbot, leading them to demand communication with a real person or abandon the interaction altogether. Unfortunately, defining how a chatbot should respond properly to every relevant user input in this paradigm requires significant upfront effort from developers. The resulting prohibitively high cost could be the main reason why usable conversational services are not widespread.

Businesses need to inject their considerations into the interaction

The primary reasons for businesses to develop conversational services are to reduce friction in serving users and to take advantage of potential up-selling opportunities. In other words, the designed conversation should not only assist users but also help businesses in achieving their goals. This means businesses might require precise control of the bot’s response at any given turn, as well as the ability to connect relevant user input to the APIs that the business operates on. Here, API integration is crucial not only because it is necessary for service fulfillment but also because it is important for interaction design.

To illustrate this, consider a scenario where a user expresses interest in seeing ‘Star Wars’. Instead of immediately asking the user for their preferred showtime, a movie ticketing chatbot would do better to first check whether tickets are still available for any showing. Prompting the user for their showtime preference, only to later inform them that all shows are sold out, creates a poor user experience. Of course, businesses want to take every opportunity to improve their bottom line, usually in a differentiated way.
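The ticketing scenario can be sketched as follows. The `SHOWINGS` table is a hypothetical in-memory stand-in for the business's real ticketing API, and the function names and response wording are made up for illustration. The point is only that the bot consults the API before deciding what to ask.

```python
from dataclasses import dataclass


@dataclass
class Showing:
    time: str
    seats_left: int


# Hypothetical in-memory stand-in for the business's ticketing API.
SHOWINGS = {
    "Star Wars": [Showing("18:00", 0), Showing("21:00", 12)],
    "Dune": [Showing("19:30", 0)],
}


def available_showtimes(title: str) -> list:
    """Return only the showtimes that still have seats."""
    return [s.time for s in SHOWINGS.get(title, []) if s.seats_left > 0]


def next_prompt(title: str) -> str:
    """Check availability first, then decide what the bot should say."""
    times = available_showtimes(title)
    if not times:
        return f"Sorry, all showings of {title} are sold out."
    return f"Which showtime for {title} would you like? Available: {', '.join(times)}."
```

With this data, the bot offers only the 21:00 showing of Star Wars and tells the user up front that Dune is sold out, rather than collecting a showtime it cannot fulfill.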

And do it in a cost-effective way

After 40 years of trial and error, we have gathered a wealth of best practices for building GUIs. Thanks to these lessons learned, we have converged on some rather universal conceptual models, frameworks, and libraries that are quite expressive but do not need a Ph.D. to pick up. As a result, almost any business can now afford to hire enough frontend talent to develop functional web or mobile apps (often in house) that serve their users through graphical user interfaces. This makes it possible for businesses to continuously update their GUI experiences, and contributes to the widespread availability of effective GUI applications.

To make CUI applications as prevalent as GUI applications, we need to reduce the cost of developing them. To achieve this, we must develop powerful yet easy-to-master conceptual models (flow is not it), frameworks, and libraries, along with best practices, so that existing business application development teams can deliver CUI experiences as successfully as they deliver GUI experiences. This way, businesses can afford continuous updates to the user experience in response to changing conditions.

LLMs might not be enough

ChatGPT has demonstrated that LLMs allow for a conversational way to access valuable static knowledge buried in unstructured text. One might hope that it should now be easy to build a conversational user interface for existing service APIs with LLMs. That is why Bill Gates hails LLMs as the next great innovation after Windows (GUI).

Unfortunately, many of us got carried away by prompt engineering. Prompt engineering is a useful way to use natural language, or prompts, to influence LLM behavior for soft use cases, where either the problem itself is open-ended or the solution does not need to be accurate. But prompts will not provide the precise control needed by hard use cases, because they lack well-defined semantics. Moreover, the same prompt can produce different behaviors across different versions of the same model, making it hard to maintain a consistent experience while growing the software.

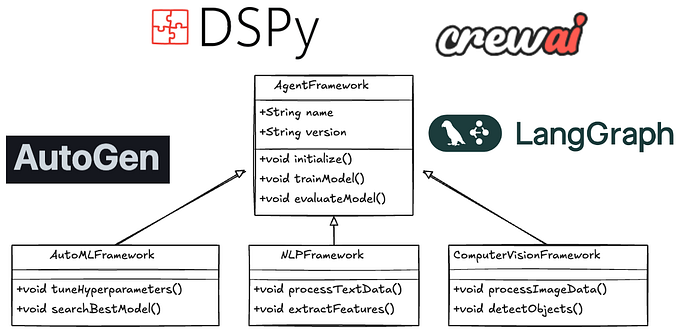

Furthermore, newer and better LLMs keep renewing the unrealistic hope among product owners that LLMs will one day solve everything for us. As a result, we are discarding many tried-and-true software engineering methods. For example, component support is at the center of any GUI framework — such as React JS or SwiftUI. In that world, we never need to code a date picker from scratch; there are many excellent plug-and-play implementations for us to choose from, allowing us to focus on more business-specific aspects of interaction. However, this feature is nowhere to be found in the tools commonly used to build chatbots today. Without component support, we have to build from scratch every time. It’s akin to discarding the rocket after its first use, inevitably leading to high costs for each CUI application.
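As a rough illustration of what component support could look like on the conversational side, here is a sketch of a reusable date-collecting component. The `DatePicker` class, its method names, and the ISO-only date format are assumptions made for this example, not an existing chatbot framework's API.

```python
from datetime import date
from typing import Optional


class DatePicker:
    """Hypothetical reusable CUI component: asks for a date and validates the reply."""

    def __init__(self, label: str):
        self.label = label

    def prompt(self) -> str:
        return f"What {self.label} would you like? (YYYY-MM-DD)"

    def parse(self, reply: str) -> Optional[date]:
        """Return the date if the reply is a valid ISO date, otherwise None."""
        try:
            return date.fromisoformat(reply.strip())
        except ValueError:
            return None


# The same component is reused by two unrelated services.
flight_date = DatePicker("departure date")
movie_date = DatePicker("show date")
```

Each service configures the component with its own label and gets prompting and validation for free, much as a GUI developer drops in an existing date picker instead of writing one.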

Parting words

If users are willing to go through the trouble of learning how to use icons, menus, and buttons on your website to get what they want, they would surely adopt a more intuitive conversational interface where they can simply state what they want and get it. The meteoric rise of ChatGPT’s user base is a great testimony to that. But why have we not seen the widespread adoption of conversational experiences?

Product owners often underestimate the difficulty of building conversational user interfaces. Their designs tend to cover only the happy path, which often renders the resulting chatbot useless. Under this CUI classification, a happy-path-only CUI lacks error correction support. While it might work for most simple use cases, it is simply not usable in real scenarios, where users will make mistakes during the interaction. We need to elevate the CUI to level 3 and beyond to gain enough user trust that users will stay with the chatbot long enough to get served.

So why do many product owners not aim for high-level CUIs to begin with? They lack the conceptual models and tools for building CUIs. Most existing CUI designs and implementations are flow-based, and covering exponentially many conversational paths with this method is simply too expensive. Conversational experiences must also align with business objectives and be developed within a reasonable budget. This suggests the need for a process that is both powerful enough for most use cases and easy for existing application teams to adopt. Luckily, a type-based solution fits the bill.

In general, we should leverage established software development principles, such as separation of concerns, model-view-controller architecture, and declarative programming, as much as possible. We will demonstrate how we can greatly reduce the cost of building usable CUIs by using components as a fundamental concept. Stay tuned.

References: