Dialog Understanding #7: Why Function Calling Is Not the Solution

While it is possible to use elaborate prompt engineering to make ChatGPT convert natural-language task requests into structured representations of their meaning, the recent introduction of the function calling API has greatly simplified this process. But can ChatGPT’s function calling alone solve dialog understanding once and for all? Let’s find out.

So what is function calling, anyway?

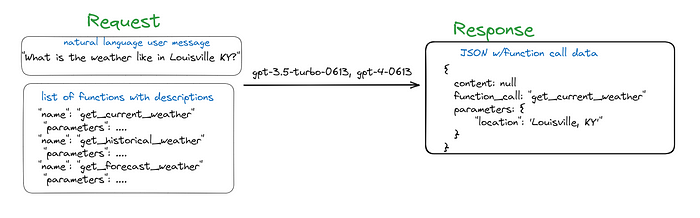

On the surface, OpenAI’s function calling appears to be a zero-shot implementation of dialog understanding. Given a set of function descriptions, OpenAI models that support this feature determine whether the user’s input implies calling one of these functions. If so, they generate a JSON-encoded function call that specifies which function to call and the values the user mentioned for its parameters. Upon receiving this JSON representation of the function call, your dialog management logic can decide what to do in cases such as missing values for required arguments. The OpenAI API can be invoked as follows:

import json

import openai

# openai.ChatCompletion is the legacy interface of the openai Python package
# (versions before 1.0); it was removed in openai>=1.0.
openai.api_key = ""  # your OpenAI key here

def getFunction(utterance, functions):
    """Return the function call the model inferred from `utterance`, or None."""
    messages = [{"role": "user", "content": utterance}]
    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo-0613",
        messages=messages,
        functions=functions,
        function_call="auto",  # auto is default, but we'll be explicit
    )
    # A "function_call" entry is present only when the model decides to call a function.
    return response["choices"][0]["message"].get("function_call")

Notice that the same chat completion API endpoint is used; the main difference is that you can now supply a list of JSON function objects that specify the names and descriptions of your functions, as well as the names and descriptions of their parameters. When the input utterance can be interpreted as triggering a certain function, the API returns a well-structured JSON object following your custom schema, specifying both the name and the arguments of the function (the arguments themselves arrive as a JSON-encoded string).
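Because the `arguments` field comes back as a JSON-encoded string rather than a nested object, the dialog manager has to decode it before using the slot values. The sketch below shows one way to do that; `parse_function_call` is a hypothetical helper, not part of the OpenAI SDK, and it assumes the returned `function_call` behaves like a plain dict (as the legacy SDK’s response objects do).

```python
import json
from typing import Optional, Tuple


def parse_function_call(function_call: Optional[dict]) -> Optional[Tuple[str, dict]]:
    """Hypothetical helper: turn a returned function_call into (name, slots).

    Returns None when the model did not call any function.
    """
    if not function_call:
        return None
    name = function_call["name"]
    try:
        # "arguments" is a JSON-encoded string, not a dict.
        slots = json.loads(function_call.get("arguments") or "{}")
    except json.JSONDecodeError:
        # The model can occasionally emit malformed JSON; treat it as no slots.
        slots = {}
    return name, slots


# Example using the shape of the response shown in use case #1.
call = {
    "arguments": "{\n \"dishName\": \"chicken sandwiches\"\n}",
    "name": "FoodOrdering",
}
print(parse_function_call(call))  # ('FoodOrdering', {'dishName': 'chicken sandwiches'})
```

Returning `None` for "no function call" keeps that case distinct from "a function call with no slots", which matters for the missing-parameter behavior discussed below.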

Use case #1: simple food ordering

First, let’s try it with a single function in the food ordering use case, using the following message and function description:

foodOrdering = {
    "name": "FoodOrdering",
    "description": "Get the dish with its quantity",
    # The slots in a skill
    "parameters": {
        "type": "object",
        "properties": {
            "dishName": {
                "type": "string",
                "description": "The name of the dish, e.g. french fries",
            },
            "quantity": {
                "type": "string",
                "description": "The quantity of this dish",
            },
        },
        "required": [],
    },
}

print(getFunction("Hey, can I get some chicken sandwiches please?", [foodOrdering]))

This prints:

> {
>   "arguments": "{\n \"dishName\": \"chicken sandwiches\"\n}",
>   "name": "FoodOrdering"
> }

As you can see, the user input is parsed successfully, but the parsing is not always reliable. For example, for the input “some chicken sandwiches,” the model sometimes fills the “quantity” slot with an arbitrary “1” or “2” even though the user never mentioned a number. This increases the workload of the dialog management module. For food ordering, however, we can always address this problem with a confirmation.
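One cheap guard against invented values, such as a “quantity” the user never stated, is to check whether each extracted value actually appears in the user’s utterance and to confirm the ones that do not. This is a heuristic sketch, assuming slot values are plain strings or numbers; `ungrounded_slots` and `confirmation_prompt` are hypothetical names, and a literal substring match will miss paraphrases such as “two” versus “2”.

```python
from typing import List, Optional


def ungrounded_slots(utterance: str, slots: dict) -> List[str]:
    """Return slot names whose values do not literally occur in the utterance."""
    text = utterance.lower()
    return [name for name, value in slots.items() if str(value).lower() not in text]


def confirmation_prompt(slots: dict, suspicious: List[str]) -> Optional[str]:
    """Hypothetical wording for a confirmation turn; None if nothing needs confirming."""
    if not suspicious:
        return None
    details = ", ".join(f"{name} = {slots[name]}" for name in suspicious)
    return f"Just to confirm: {details}?"


utterance = "Hey, can I get some chicken sandwiches please?"
slots = {"dishName": "chicken sandwiches", "quantity": "2"}  # "2" was invented by the model
suspicious = ungrounded_slots(utterance, slots)
print(suspicious)  # ['quantity']
print(confirmation_prompt(slots, suspicious))  # Just to confirm: quantity = 2?
```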

Use case #2: Missing parameters

What happens if the user fails to mention the parameters the function needs? Let’s try a restaurant reservation:

function2 = {
    "name": "MakeReservation",
    "description": "Make Reservation",
    "parameters": {
        "type": "object",
        "properties": {
            "number": {
                "type": "number",
                "description": "number of people",
            },
            "date": {
                "type": "string",
                "description": "date of the reservation",
            },
            "time": {
                "type": "string",
                "description": "time of the reservation",
            },
        },
    },
}

message2 = [
    "Hi, I would like to make a reservation for a table of two at 2:00 pm tomorrow.",
    "Hi, I would like to make a reservation for a table of two for tomorrow.",
    "Hi, I would like to make a reservation for a table of two.",
    "Hi, I would like to make a reservation for a table",
]

# function0 is not shown in this section; function11 is defined in use case #3.
for message in message2:
    print(getFunction(message, [function0, function11, function2]))

Interestingly, the number of parameters for which the user provides values significantly influences ChatGPT’s behavior here. The model produces a function call only when the user’s utterance fills enough parameters. When too few are filled, it produces no function call at all, even though the user clearly and explicitly requests a reservation, as happens with the last two utterances above. This behavior is unacceptable for dialog understanding because it makes dialog management extremely difficult to implement: the dialog manager never learns the user’s intent, so it cannot ask for the missing details. Nonetheless, it might be suitable for tool use when building agents, which could well be the use case this API was designed for.
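For contrast, here is what a dialog manager would do if it did receive the intent with only some slots filled: ask for the first parameter that is still missing. This minimal slot-filling sketch presumes the MakeReservation intent has already been recognized, which is exactly what function calling failed to provide for the last two utterances. The `prompts` mapping and `next_question` helper are hypothetical, and the schema is an abbreviated copy of `function2`.

```python
from typing import Optional

# Abbreviated copy of the MakeReservation schema (function2).
make_reservation = {
    "name": "MakeReservation",
    "parameters": {
        "type": "object",
        "properties": {
            "number": {"type": "number", "description": "number of people"},
            "date": {"type": "string", "description": "date of the reservation"},
            "time": {"type": "string", "description": "time of the reservation"},
        },
    },
}

# Hypothetical prompts a bot designer would write for each slot.
prompts = {
    "number": "For how many people?",
    "date": "For which date?",
    "time": "At what time?",
}


def next_question(schema: dict, filled: dict) -> Optional[str]:
    """Return the prompt for the first unfilled slot, or None when all are filled."""
    for slot in schema["parameters"]["properties"]:  # dicts keep insertion order
        if filled.get(slot) in (None, ""):
            return prompts[slot]
    return None


# "Hi, I would like to make a reservation for a table of two."
print(next_question(make_reservation, {"number": 2}))  # For which date?
```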

Use case #3: user changes their mind

What happens if the user changes their mind about one of their choices?

function10 = {
    "name": "SlotUpdate",
    "description": "change the value",
    # The slots in a skill
    "parameters": {
        "type": "object",
        "properties": {
            "newValue": {
                "type": "string",
                "description": "the new value",
            },
            "oldValue": {
                "type": "string",
                "description": "the old value",
            },
        },
    },
}

function11 = {
    "name": "SlotUpdateDish",
    "description": "change the dish",
    # The slots in a skill
    "parameters": {
        "type": "object",
        "properties": {
            "newDishName": {
                "type": "string",
                "description": "the new dish",
            },
            "oldDishName": {
                "type": "string",
                "description": "the old dish",
            },
        },
    },
}

message1 = "Can I change fish sandwich to chicken sandwich, please?"

But it seems that OpenAI models have a hard time if we use the generic version of SlotUpdate (function10):

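> print(getFunction(message1, [function0, function10]))
< {
<   "arguments": "{\n \"dishName\": \"chicken sandwich\"\n}",
<   "name": "FoodOrdering"
< }

Instead of an update, the model treats the utterance as a new order for “chicken sandwich” and drops “fish sandwich” entirely. It does work, however, when a concrete version of SlotUpdate (function11) is provided: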

> print(getFunction(message1, [function0, function11]))
< {
<   "arguments": "{\n \"newDishName\": \"chicken sandwich\",\n \"oldDishName\": \"fish sandwich\"\n}",
<   "name": "SlotUpdateDish"
< }

Why doesn’t function calling solve dialog understanding?

Clearly, the function calling API alone is NOT a viable solution for dialog understanding. The problem is not solely accuracy, which can and will improve. Rather, the API needs to be designed for the dialog understanding use case and address issues such as the very common case of users not supplying all the information needed to call a function in one shot:

User: I’d like to get your house meal, please.

Bot: What side do you prefer?

User: Onion rings, thanks.

Bot: What about a drink?

- While function calling is appropriate for the first turn, it is completely inadequate for the second turn, “Onion rings, thanks.” To understand this utterance correctly, we need another API that accepts both the user utterance and the function in focus, along with its parameter specification.

- No matter how much OpenAI improves its function calling API, the model will still make mistakes. When these mistakes pose a high risk or have a high impact on a business, we need a quick and easy way to fix them instead of waiting for the next round of fine-tuning.

- New product names might not appear in the LLM’s pre-training or post-training data, so supplying a list of product names could improve the accuracy of the conversion. There needs to be an effective way to use such a business-specific list, ideally without retraining the model.
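For the first point, one rough approximation with the existing API is to hand the model the conversational context explicitly: pass only the function in focus, state which slot the bot just asked about, and force that function with `function_call={"name": ...}`, a form the chat completion API accepts for requiring a specific function. The sketch below only builds the request arguments; `build_followup_request`, the `OrderHouseMeal` schema, and the system-message wording are all hypothetical, and nothing here guarantees the model will interpret the reply correctly.

```python
import json


def build_followup_request(function: dict, pending_slot: str,
                           bot_question: str, utterance: str) -> dict:
    """Hypothetical helper: build ChatCompletion.create kwargs that interpret a
    follow-up answer in the context of the function in focus."""
    system = (
        f"The user is filling in the {function['name']} request. "
        f"The assistant just asked: {bot_question!r} (slot: {pending_slot}). "
        "Interpret the user's reply as a value for that slot."
    )
    return {
        "model": "gpt-3.5-turbo-0613",
        "messages": [
            {"role": "system", "content": system},
            {"role": "assistant", "content": bot_question},
            {"role": "user", "content": utterance},
        ],
        "functions": [function],
        # Force the function in focus so the model fills its parameters.
        "function_call": {"name": function["name"]},
    }


# Hypothetical schema for the house-meal dialog above.
house_meal = {
    "name": "OrderHouseMeal",
    "description": "Order the house meal",
    "parameters": {
        "type": "object",
        "properties": {
            "side": {"type": "string", "description": "the side dish"},
            "drink": {"type": "string", "description": "the drink"},
        },
    },
}

request = build_followup_request(house_meal, "side", "What side do you prefer?",
                                 "Onion rings, thanks.")
print(json.dumps(request["function_call"]))  # {"name": "OrderHouseMeal"}
# With the legacy SDK, this would be sent as: openai.ChatCompletion.create(**request)
```

Keeping the request construction separate from the network call makes the context-passing logic easy to inspect and test on its own.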

Parting words

As shown above, ChatGPT cannot fully address dialog understanding using function descriptions alone. That said, zero-shot text-to-JSON conversion driven by nothing more than an API specification certainly offers hope for a more developer-friendly way of building dialog understanding modules. How do we address these open issues while maintaining the same level of developer-friendliness? Read on.

Note: this write-up is based on the research done by SUNNYMAY MAY. Thanks.
