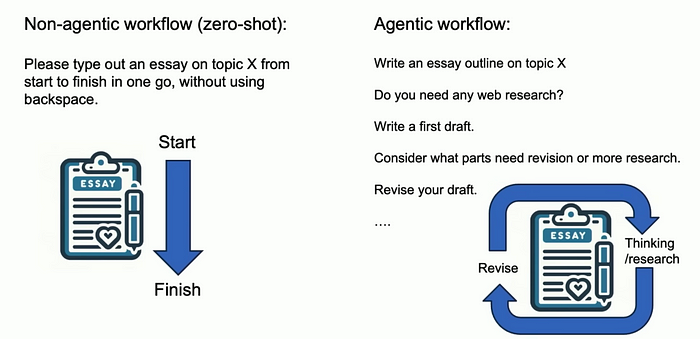

One of the new terms being thrown around is ‘agentic workflows.’ Everything is being reinvented by agentic workflows; even retrieval-augmented generation (RAG) now has a new variant called agentic RAG. (Is it really necessary?) What exactly is an agentic workflow? Let’s find out.

What is the key to being agentic, and why?

One of the main advocates for agentic workflow, Andrew Ng, has identified the following key design patterns: reflection, tool use, planning, and multi-agent collaboration. There is plenty of material detailing these patterns; here, we will focus on what motivates them in the first place.

- Reflection. No matter how much effort we put into engineering the prompt, an LLM is an imperfect problem solver, and it is bound to make some mistakes (hallucinations being the best-known kind). While it is hard to eliminate these errors altogether, we can generally improve performance by using an LLM-based critic and selectively applying its feedback to regenerate solutions (potentially over multiple rounds). Generally, as long as the critic is constructed independently of the generator, and both perform better than random, we can expect an improvement.

- Tool use. Early on, LLM developers realized that relying only on a pre-trained transformer to generate output tokens is limiting, as LLMs have no knowledge of events that occurred after the cutoff date of the dataset used for pretraining. To answer questions with up-to-date information, it is useful to use tools to do a web search first and pass the search results back to the LLM as additional input context for further processing. (Note that this is simply retrieval-augmented generation, or RAG, over a web corpus, although RAG is typically used with a private collection of text.)

- Planning/Multi-agent collaboration. It is useful to decompose the original problem into many smaller ones (which requires planning), solve them separately, each with a different agent, and then combine the component solutions (which requires collaboration between agents).
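
To make these patterns concrete, here is a minimal sketch of how reflection and planning/collaboration might fit together. The functions `generate`, `critique`, `plan`, and `solve` are hypothetical stand-ins invented for illustration: in a real system each would call an LLM (and possibly tools such as web search), whereas here they are toy string functions so the control flow can be run as-is.

```python
from typing import Callable, List


# Hypothetical stand-ins for LLM calls; a real system would call a model API here.
def generate(task: str, feedback: str = "") -> str:
    """Toy generator: returns a weak draft first, a better answer once given feedback."""
    return f"answer to {task}" if feedback else f"draft for {task}"


def critique(task: str, solution: str) -> str:
    """Toy critic: returns feedback text, or an empty string when satisfied."""
    return "" if solution.startswith("answer") else "be more specific"


def reflect(task: str, max_rounds: int = 3) -> str:
    """Reflection: regenerate until the critic has no feedback or rounds run out."""
    solution = generate(task)
    for _ in range(max_rounds):
        feedback = critique(task, solution)
        if not feedback:
            break
        solution = generate(task, feedback)
    return solution


def plan(problem: str) -> List[str]:
    """Planning: split the problem into subtasks (naively, on ' and ')."""
    return [part.strip() for part in problem.split(" and ")]


def solve(problem: str, agent: Callable[[str], str] = reflect) -> str:
    """Collaboration: hand each subtask to an agent, then combine the results."""
    return "; ".join(agent(subtask) for subtask in plan(problem))


print(solve("summarize the report and list open questions"))
```

Every subtask uses the same `reflect` agent here; passing a different callable per subtask would be one way to model specialized agents.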

The theme of these patterns? Divide and conquer.

It should be clear that the common theme motivating these key agentic design patterns is none other than divide-and-conquer. Divide-and-conquer is a fundamental problem-solving technique widely used in software development due to its efficiency and scalability. It involves breaking down a complex problem into smaller, simpler subproblems, solving each subproblem independently, and then combining the solutions to solve the original problem.
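
As a reminder of what the three steps look like in code, here is a short sketch of merge sort, a textbook instance of divide-and-conquer. It is written for readability rather than performance, and the function name is our own choice.

```python
from typing import List


def merge_sort(items: List[int]) -> List[int]:
    """Sort a list by dividing it, sorting each half, and merging the results."""
    # Base case: a list of zero or one element is already sorted.
    if len(items) <= 1:
        return list(items)

    # Divide: split the problem into two smaller subproblems.
    mid = len(items) // 2
    left = merge_sort(items[:mid])    # Conquer the left half independently.
    right = merge_sort(items[mid:])   # Conquer the right half independently.

    # Combine: merge the two sorted halves into one sorted list.
    merged: List[int] = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```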

In software development (and beyond), the divide-and-conquer strategy is used to solve all kinds of problems, including many core algorithms that have had a huge impact on our civilization:

- Sorting algorithms: Merge sort, quicksort, etc.

- Searching algorithms: Binary search

- Numerical algorithms: Fast Fourier transform (FFT)

- Computational geometry: Closest pair of points, convex hull

- Matrix algorithms: Strassen’s matrix multiplication

It is a standard tool people use for solving problems for many reasons. First, breaking down a problem into smaller subproblems promotes modularity in software design, making it easier to understand, maintain, and test individual components. Additionally, when we decompose a problem into standard subproblems, it also helps with reuse, as different solutions can be built using many common components. Furthermore, these subproblems can be solved across multiple processors or computers simultaneously, making this approach suitable for handling large-scale problems and distributed systems.

What is next? A historical perspective.

Instead of using one large LLM to solve every problem, applying the divide-and-conquer principle in the age of LLMs also offers many practical benefits. For one, larger models are expensive in both training and inference. For many relatively simple problems, these larger LLMs are overkill at best and impractical at worst, when the cost of the solution outweighs the value of solving the problem. Additionally, it is much easier to upgrade smaller LLMs one at a time than to update all functionality at once. There are many more advantages as well.

Instead of figuring out how to apply divide-and-conquer to each problem from scratch, it is common for us to converge on a specific architectural design pattern for each important class of applications. For example, when building applications with graphical user interfaces, we have generally converged on the model-view-controller (MVC) pattern, a software architectural pattern that separates a GUI application into three interconnected components:

- Model: Represents the data and logic of the application. It encapsulates the business rules, data access, and other core functionalities.

- View: The user interface that displays the data to the user. It is responsible for rendering the model’s data in a visually appealing and interactive way.

- Controller: Handles user input and updates the model accordingly. It acts as an intermediary between the view and the model, coordinating their interactions.
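
The three roles can be illustrated with a small sketch. The class names (`CounterModel`, `TextView`, `CounterController`) and the counter example are invented for illustration, and this variant lets the view subscribe to model changes directly, which is only one of several common ways to wire MVC together.

```python
from typing import Callable, List


class CounterModel:
    """Model: holds the data and the rules for changing it."""

    def __init__(self) -> None:
        self.value = 0
        self._listeners: List[Callable[[int], None]] = []

    def subscribe(self, listener: Callable[[int], None]) -> None:
        """Register a callback to be invoked whenever the value changes."""
        self._listeners.append(listener)

    def increment(self, step: int = 1) -> None:
        self.value += step
        for listener in self._listeners:
            listener(self.value)


class TextView:
    """View: renders the model's data; knows nothing about user input."""

    def __init__(self) -> None:
        self.rendered: List[str] = []

    def render(self, value: int) -> None:
        line = f"Count: {value}"
        self.rendered.append(line)
        print(line)


class CounterController:
    """Controller: translates user input into updates on the model."""

    def __init__(self, model: CounterModel) -> None:
        self.model = model

    def handle(self, command: str) -> None:
        if command == "+":
            self.model.increment(1)
        elif command == "-":
            self.model.increment(-1)
        else:
            raise ValueError(f"unknown command: {command!r}")


model = CounterModel()
view = TextView()
model.subscribe(view.render)  # The view re-renders on every model change.
controller = CounterController(model)

for command in ["+", "+", "-"]:
    controller.handle(command)
```

Because the view only depends on the model's change notifications, it could be swapped for a different rendering (say, a web page) without touching the model or the controller.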

Nowadays, most user-facing applications have two parts: a frontend and a backend. The Model component is located in the backend, where the application logic is implemented. The View component manages the look and feel, while the Controller component handles interaction logic. Together, they make up the frontend.

An architectural pattern captures the best practices of applying divide-and-conquer to its corresponding class of applications, so it tends to be quite stable. For example, the MVC pattern was introduced by Trygve Reenskaug, a Norwegian computer scientist, in the late 1970s while he was working with Smalltalk at Xerox PARC. It still underlies many modern GUI frameworks.

Chatbots, agents, and copilots are applications with a conversational user interface (CUI). But unlike their counterparts in web and mobile apps, we do not yet even talk about their architectural patterns, as everyone is still busy exploring the limits of what these LLMs can do. As we start to make these user-facing LLM applications available in other languages, however, we will need to answer some interesting questions. For example, how do we deliver a consistent user experience regardless of which language users interact in? We might end up with something like the MVC pattern.

Parting words

While it is useful to create new terms to capture the novelty of emerging technology and generate excitement, it is also important to trace their engineering roots to put these new concepts into perspective. In this blog, we argue that the agentic workflow is simply a direct application of the divide-and-conquer principle in the age of LLM applications. This new perspective, along with tool use (or data-to-text conversion and vice versa), enables a hybrid approach: we can decompose problems as before, selecting the right component for the job, whether it’s an LLM-based implementation (known as agents) or traditional coding. In the end, the most important thing is solving new problems and building new experiences.